It is a truism that one only notices certain things when they break. An encounter with some error can expose momentarily the chaotic technical manifold that lies hidden below the surface when our devices function smoothly, and a little investigation reveals social forces at work in that manifold. For the history of capitalist society is caked in the layers of its technical infrastructure, and one need only scratch the surface of the most banal of technologies to discover that in them are fossilized the actions and decisions of institutions and individuals, companies and states. If our everyday interactions are quietly mediated by thousands of technical protocols, each of these had to be painstakingly constructed and negotiated by the sorts of interested parties that predominate in a world of capital accumulation and inter-state rivalry. Many persist as the outcome of survival struggles with others that proved less ‘fit’, or less fortunate, and which represent paths not taken in technical history.

In this sense, technical interrogation can cross over into a certain kind of social demystification; if the reader will tolerate a little technical excursus, we will thus find our way to terrain more comfortable to NLR readers. A string of apparently meaningless characters which render nonsensical an author’s name is the clue that will lead us here towards the social history of technology. That name bears a very normal character – an apostrophe – but it appears here as &#039;. Why? In the ASCII text-encoding standard, the apostrophe is assigned the code 39. On the web, the enclosure of a string of characters between an ampersand and a semicolon is a very explicit way of using some characters to encode another if you aren’t confident that the latter will be interpreted correctly; it’s called an ‘HTML entity’, and a ‘#’ after the ampersand signals that the character is being named by its numeric code – thus &#039; is a sort of technically explicit way of specifying a “’”.
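
For readers who want to see the mechanism at work, here is a minimal Python sketch using only the standard library's `html` module. It assumes the entity is written in full as `&#039;`, with the `#` that marks a numeric reference; the sample name and the variable names are purely illustrative.

```python
import html

# The apostrophe sits at code 39 in ASCII (and therefore in Unicode).
apostrophe_code = ord("'")

# A numeric HTML entity names a character by that code, here zero-padded.
entity = f"&#{apostrophe_code:03d};"

# Decoding the entity gives back the plain apostrophe.
decoded = html.unescape(entity)

# Illustrative name only: what a reader sees when the entity is left undecoded,
# and what they should have seen instead.
raw_name = "O&#039;Brien"
clean_name = html.unescape(raw_name)

print(apostrophe_code, entity, decoded, clean_name)
```

The gibberish in the author's name is thus nothing more than a decoding step that was never carried out.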

Until relatively recently there was a babel of distinct text encodings competing on the web and beyond, and text frequently ended up being read in error according to an encoding other than that by which it was written, which could have the effect of garbling characters outside the narrow Anglo-centric set defined by ASCII (roughly those that you would find on a standard keyboard in an Anglophone country). There is a technical term for such unwitting mutations: mojibake, from the Japanese 文字化け (文字: ‘character’; 化け: ‘transformation’). In an attempt to pre-empt this sort of problem, or to represent characters beyond the scope of the encoding standard in which they are working, platforms and authors of web content sometimes encode certain characters explicitly as entities. But this can have an unintended consequence: if that ampersand is read as an ampersand, rather than the beginning of a representation of another character, the apparatus of encodings again peeps through the surface of the text, confronting the reader with gibberish.
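
Both failure modes can be reproduced in a few lines. The sketch below is an illustration under stated assumptions rather than a reconstruction of any particular platform's behaviour: it assumes text written as UTF-8 and misread as Latin-1, and a hypothetical pipeline that escapes an already-escaped entity a second time.

```python
import html

# 1. Mojibake: text written in one encoding, read back in another.
original = "café"
garbled = original.encode("utf-8").decode("latin-1")

# The damage is reversible only if we know which wrong turn was taken.
repaired = garbled.encode("latin-1").decode("utf-8")

# 2. An entity that 'peeps through': the ampersand of an existing entity
#    is itself escaped, so one round of decoding no longer suffices.
escaped_once = "O&#039;Brien"               # hypothetical stored value
escaped_twice = html.escape(escaped_once)   # the '&' becomes '&amp;'
shown_to_reader = html.unescape(escaped_twice)

print(garbled, repaired, escaped_twice, shown_to_reader)
```

In the second case the browser does its job correctly, decoding exactly once, and the reader is left looking at the entity itself.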

In large part such problems can be traced back to the limitations of ASCII – the American Standard Code for Information Interchange. This in turn has roots that predate electronic computation; indeed, it is best understood in the context of the longer arc of electrical telecommunications. These have always been premised on encoding and decoding processes which reduce text down to a form more readily transmissible and manipulable by machine. While theoretically possible, direct transmission of individual alphabetic characters, numerals and punctuation marks would have involved contrivances of such complexity they would probably not have passed the prototype stage – somewhat like the early computers that used decimal rather than binary arithmetic.

In the first decades of electrical telegraphy, when Samuel Morse’s code was the reigning standard, skilled manual labour was required both to send and receive, and solidarities formed between far-flung operators – many of whom were women – as 19th Century on-line communities flourished, with workers taking time to chat over the wires when paid-for or official traffic was low. Morse could ebb and flow, speed up and slow down, and vary in fluency and voice just like speech. But in the years after the Franco-Prussian War, French telegrapher Émile Baudot formulated a new text encoding standard which he combined with innovations allowing several telegraph machines to share a single line by forcibly regimenting the telegraphers’ flow into fixed-length intervals – arguably the first digital telecommunications system.

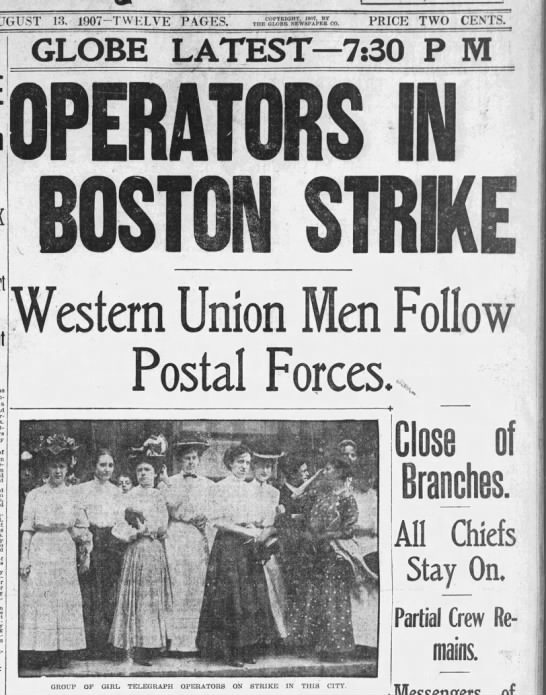

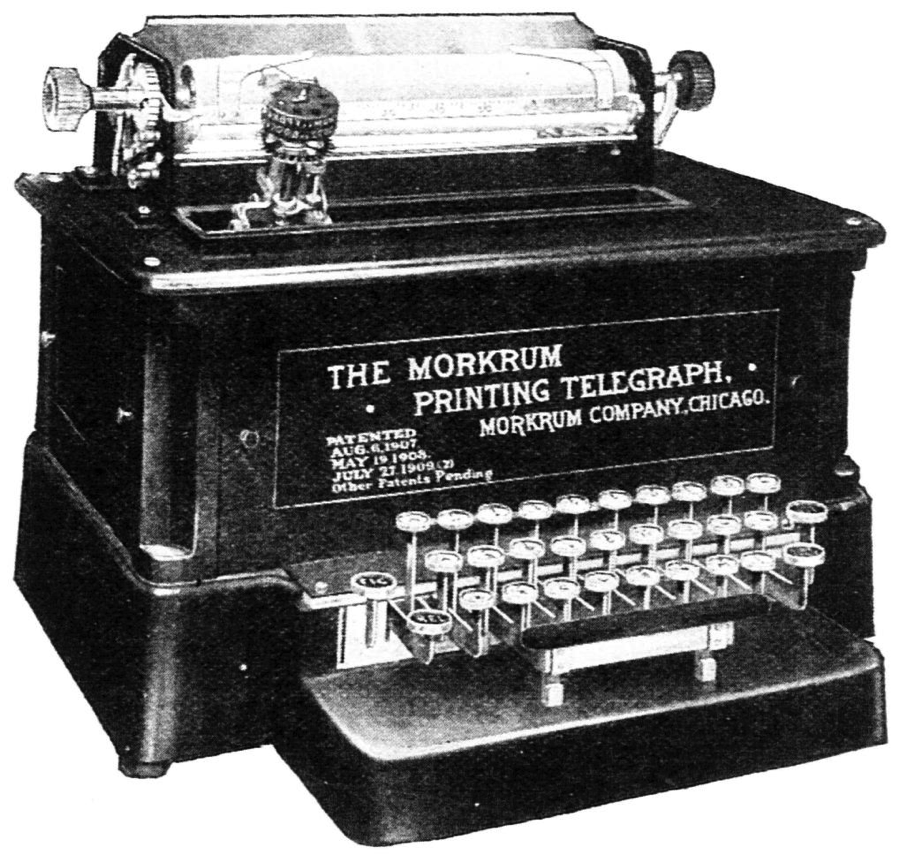

Though Baudot code was taken up by the French Telegraph Administration as early as 1875, it was not put to use so readily elsewhere. In the United States, the telegraphers were still gradually forming into powerful unions, and in 1907 telecommunications across the whole of North America were interrupted by strikes targeting Western Union, which included demands over unequal pay for women and sexual harassment. A year later, the Morkrum Company’s first Printing Telegraph appeared, automating the encoding and decoding of Baudot code via a typewriter-like interface. Whereas Morse had demanded dexterity and fluency in human operators, Baudot’s system of fixed intervals was more easily translated into the operations of a machine, and it was now becoming a de facto global standard.

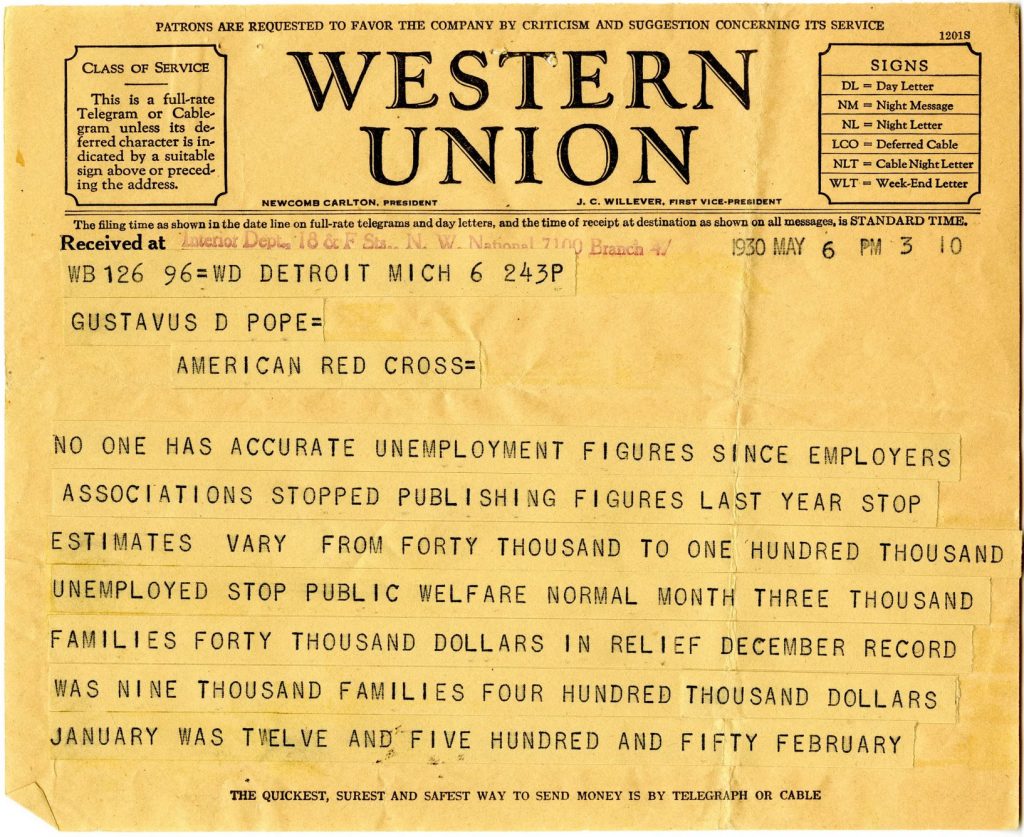

In 1924, Western Union’s Baudot-derived system was enshrined by the International Telegraph Union as the basis of a new standard, International Telegraph Alphabet No. 2, which would reign throughout mid-century (ITA1 was the name given retrospectively to the first generation of Baudot code). Though Baudot was easier than Morse to handle automatically, only a limited number of characters could be represented by its five bits, hence the uppercase roman letters that characterized the Western telegraphy of that moment.¹ The European version allowed for É – a character that would remain absent from the core Anglo-American standards into the era of the web – but still lacked many of the other characters of European languages. There were some provisions for punctuation using special ‘shift’ characters which – like the shift key of a typewriter – would move the teletype machine into a different character set or encoding. But this shifting between modes was laborious, costly, and error-prone – for if a shift was missed the text would be mangled – pushing cautious senders towards simple use of the roman alphabet, in a way that is still being echoed in the era of the web. A 1928 guide, How to Write Telegrams Properly, explained:

This word ‘stop’ may have perplexed you the first time you encountered it in a message. Use of this word in telegraphic communications was greatly increased during the World War, when the Government employed it widely as a precaution against having messages garbled or misunderstood, as a result of the misplacement or emission of the tiny dot or period. Officials felt that the vital orders of the Government must be definite and clear cut, and they therefore used not only the word ‘stop’, to indicate a period, but also adopted the practice of spelling out ‘comma’, ‘colon’, and ‘semi-colon’. The word ‘query’ often was used to indicate a question mark. Of all these, however, ‘stop’ has come into most widespread use, and vaudeville artists and columnists have employed it with humorous effect, certain that the public would understand the allusion in connection with telegrams. It is interesting to note, too, that although the word is obviously English it has come into general use in all languages that are used in telegraphing or cabling.
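
Why a missed shift was so costly can be sketched with a toy decoder. The code table and the two shift values below are hypothetical, chosen only for illustration; they are not the real ITA2 assignments. What matters is that the same numeric code means different things depending on which mode the machine believes it is in.

```python
# Hypothetical miniature code table, NOT the real ITA2 assignments.
LETTERS = {1: "A", 2: "B", 3: "C", 4: "S", 5: "T", 6: "O", 7: "P"}
FIGURES = {1: "1", 2: "2", 3: "3", 4: ".", 5: ",", 6: "?", 7: ":"}
LTRS, FIGS = 30, 31  # illustrative shift codes


def decode(codes):
    """Decode a sequence of codes, tracking the current shift state."""
    table = LETTERS
    out = []
    for code in codes:
        if code == LTRS:
            table = LETTERS
        elif code == FIGS:
            table = FIGURES
        else:
            out.append(table[code])
    return "".join(out)


message = [4, 5, 6, 7, FIGS, 4, LTRS, 1, 2, 3]   # STOP . ABC
lost_figs = [4, 5, 6, 7, 4, LTRS, 1, 2, 3]       # figure shift dropped
lost_ltrs = [4, 5, 6, 7, FIGS, 4, 1, 2, 3]       # letter shift dropped

print(decode(message), decode(lost_figs), decode(lost_ltrs))
```

One dropped signal turns a full stop into a stray letter, or everything after it into figures; spelling out ‘stop’ in letters avoids the shift altogether.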

The Cyrillic alphabet had its own version of Baudot code, but depended on use of a compatible teletype, making for a basic incompatibility with the Anglo-centric international standard. The vast number of Chinese characters of course ruled out direct encoding in such a narrow system; instead numeric codes identifying individual characters would be telegraphed, requiring them to be manually looked up at either end. Japanese was telegraphed using one of its phonetic syllabaries, katakana, though even these 48 characters were more than Baudot could represent in a single character set. Thus technical limitation reinforced the effects of geopolitical hegemony to channel the world’s telecommunications through a system whose first language would always be English.

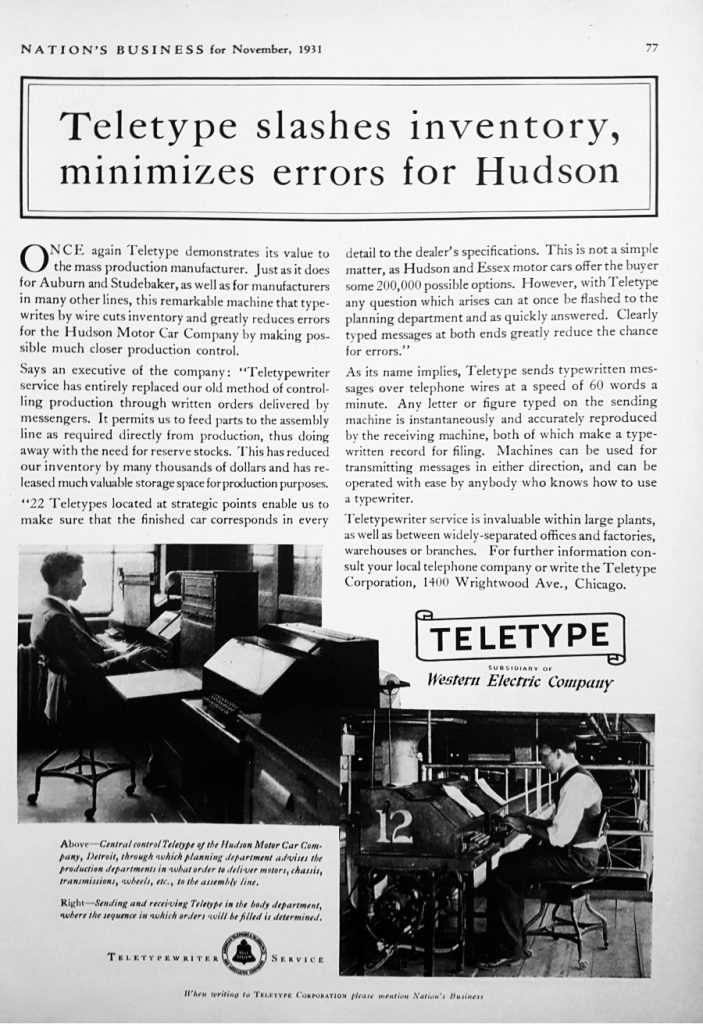

Morkrum’s printing teletypes came to dominate American telecommunications, and after a name change to the Teletype Corporation, in a 1930s acquisition the company was subsumed into the giant Bell monopoly – which itself was to become practically an extension of the 20th Century American state, intimately involved in such things as Cold War missile defence systems. Having partly automated away the work of the telegraphers, in the early 1930s Teletype were already boasting in the business press of their role in facilitating lean production in the auto industry, replacing human messengers with direct communications to the assembly line – managerial missives telecommunicated in all caps, facilitating a tighter control over production.

With the propensity of telegraphic text to end up garbled, particularly when straying beyond the roman alphabet, the authority of those managerial all caps must sometimes have been lost in a blizzard of mojibakes. Communications across national borders – as in business and diplomatic traffic, for example – were always error-prone, and an incorrect character might mess up a stock market transaction. The limitations of Baudot code thus led to various initiatives for the formation of a new standard.

In the early 1960s, Teletype Corporation and IBM, among others, negotiated a new seven-bit standard, which could handle lower case letters and the standard punctuation marks of English. And before these components of the English language, the codes inserted into the beginning of this standard – with its space for a luxurious 128 characters – had a lot to do with the physical operation of particular bits of office equipment which Bell was marketing at the time via its Western Electric subsidiary. Thus while ASCII would carry codes to command a machine to ring a physical bell or control a feed of paper, it had no means of representing the few accented characters of French or Italian. The market unified by such acts of standardization was firstly national and secondarily Anglophone; the characters of other languages were a relatively distant concern.

Alongside these developments in existing telecoms, early computing struggled through its own babble of incompatible encodings. By the early 1960s, as computer networks began to spread beyond their earliest military uses, and with teletype machines now being called upon to act as input/output devices for computers, such problems were becoming more pressing. American ‘tech’ was in large part being driven directly by the Cold War state, and in 1968 it was Lyndon Johnson who made ASCII a national standard, signing a memorandum dictating its use by Federal agencies and departments.

ASCII would remain the dominant standard well into the next century, reflecting American preeminence within both telecoms and computation, and on the global stage more generally (even within the Soviet Union, computing devices tended to be knock-offs of American models – in a nominally bipolar world, one pole was setting the standards for communications and computation). To work around the limitations of ASCII, various ‘national’ variants were developed, with some characters of the American version swapped for others more important in a given context, such as accented characters or local currency symbols. But this reproduced some of the problems of the ways that Baudot had been extended: certain characters became ‘unsafe’, prone to garbling, while the core roman alphabet remained reliable.

Consider the narrow set of characters one still sees in website domains and email addresses: though some provisions have been made for internationalization on this level, even now, for the most part only characters covered by American ASCII are considered safe to use. Others risk provoking some technical upset, for the deep infrastructural layers laid down by capital and state at the peak of American dominance are just as monoglot as most English-speakers. Like the shift-based extensions to Baudot, over time, various so-called ‘extended ASCII’ standards were developed, which added an extra bit to provide for the characters of other languages – but always with the English set as the core, and still reproducing the same risk of errors if one of these extended variants was mistaken for another. It was these standards in particular which could easily get mixed up in the first decades of the web, leading to frequent mojibakes when one strayed into the precarious terrain of non-English text.
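
The hazard of mistaking one extended variant for another is easy to demonstrate. The sketch below takes a single byte from the upper, eighth-bit range and reads it under four eight-bit encodings that ship with Python; the choice of these four is an assumption made for illustration, and many others would serve as well.

```python
# One byte above the 7-bit ASCII range.
byte = bytes([0xE9])

# Four 8-bit encodings from Python's standard codec set.
encodings = ["latin-1", "cp437", "koi8_r", "iso8859_7"]

# The same byte yields a different character under each reading.
readings = {name: byte.decode(name) for name in encodings}

# The ASCII core, by contrast, reads identically everywhere.
ascii_safe = {name: b"stop".decode(name) for name in encodings}

# Plain 7-bit ASCII has no reading for the byte at all.
try:
    byte.decode("ascii")
    ascii_accepts = True
except UnicodeDecodeError:
    ascii_accepts = False

print(readings, ascii_safe, ascii_accepts)
```

Only the English core survives every reading intact, which is the sense in which the other characters were ‘unsafe’.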

Still, reflecting the increasing over-growth of American tech companies from the national to the global market in the 1980s, an initiative was launched in 1988 by researchers from Xerox and Apple to bring about a ‘unique, unified, universal’ standard which could accommodate all language scripts within its 16 bits and thus, effectively, solve these encoding problems once and for all. If one now retrieves from archive.org the first press release for the Unicode Consortium, which was established to codify the new standard, it is littered with mojibakes, having apparently endured an erroneous transition between encodings at some point.

The Consortium assembled leading computing companies who were eyeing global markets; its membership roster since then is a fairly accurate index of the rising and falling fortunes of American technology companies: Apple, Microsoft and IBM have remained throughout; NeXT, DEC and Xerox were present at the outset but dropped out early on; Google and Adobe have been consistent members closer to the present. But cold capitalist calculation was at least leavened by some scholarly enthusiasm and humanistic sensibility as academic linguists and others became involved in the task of systematizing the world’s scripts in a form that could be interpreted easily by computers; starting from the millennium, various Indian state authorities, for example, became voting members of the consortium as it set about encoding the many scripts of India’s huge number of languages.

Over time, Unicode would even absorb the scripts of ancient, long-dead languages – hence it is now possible to tweet, in its original script, text which was originally transcribed in the early Bronze Age if one should so desire, such as this roughly 4,400 year old line of ancient Sumerian: ‘? ??? ???? ? ?? ????? ??’ (Ush, ruler of Umma, acted unspeakably). It must surely be one of the great achievements of capitalist technology that it has at least partially offset the steamrollering of the world’s linguistic wealth by the few languages of the dominant powers, by increasingly enabling the speakers of rare, threatened languages to communicate in their own scripts; it is conceivable that this could save some from extinction. Yet, as edifying as this sounds, it is worth keeping in mind that, since Unicode absorbed ASCII as its first component part, its eighth character (BEL, U+0007) will forevermore be a signal to make a bell ring on an obsolete teletype terminal produced by AT&T in the early 1960s. Thus the 20th Century dominance of the American Bell system remains encoded in global standards for the foreseeable future, as a sort of permanent archaeological layer, while the English language remains the base of all attempts at internationalization.
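
This archaeological layer can be inspected directly. The following sketch, which relies only on Python's built-in Unicode support, checks that the bell character occupies code 7, that it is classed as a control character, and that ASCII text passes into UTF-8 (one common encoding of Unicode, assumed here for illustration) without a single byte changing.

```python
import unicodedata

# The bell: code 7, the eighth position when counting from zero.
bell = chr(7)
same_as_escape = bell == "\a"

# Unicode classes it as a control character ('Cc'), not a letter or symbol.
category = unicodedata.category(bell)

# Its byte representation is identical in ASCII and in UTF-8.
ascii_bytes = bell.encode("ascii")
utf8_bytes = bell.encode("utf-8")

# The same holds for any text confined to the ASCII range.
text = "plain English text"
identical = text.encode("ascii") == text.encode("utf-8")

print(same_as_escape, category, ascii_bytes, utf8_bytes, identical)
```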

Closer to the present, the desire of US tech companies to penetrate the Japanese market compelled further additions to Unicode which, while not as useless as the Teletype bell character, certainly lack the unequivocal value of the world’s endangered scripts. Japan had established a culture of widespread mobile phone use quite early on, with its own specificities – in particular the ubiquitous use of ideogrammatic emoji (絵文字: ‘picture character’; this is the same ‘moji’ as that of mojibake). A capacity to handle emoji was thus a prerequisite for any mobile device or operating system entering the market, if it was to compete with local incumbents like Docomo, which had first developed the emoji around the millennium. Since Docomo hadn’t been able to secure a copyright on its designs, other companies could copy them, and if rival operators were not to deprive their customers of the capacity to communicate via emoji with people on other networks, some standardization was necessary. Thus emoji were to be absorbed into the Unicode standard, placing an arbitrary mass of puerile images on the same level as the characters of Devanagari or Arabic, again presumably for posterity. So while the world still struggles fully to localize the scripts of websites and email addresses, and even boring characters like apostrophes can be caught up in encoding hiccups, people around the world can at least communicate fairly reliably with Unicode character U+1F365 FISH CAKE WITH SWIRL DESIGN (🍥). The most universalistic moments of capitalist technology are actualized in a trash pile of meaningless particulars.
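
The fish cake can be looked up like any other character. A brief sketch, again assuming nothing beyond Python's standard `unicodedata` module, also shows that this code point lies beyond the 16 bits originally envisaged for the standard, so that it takes four bytes in either UTF-8 or UTF-16.

```python
import unicodedata

fish_cake = chr(0x1F365)

# The official character name as recorded in the Unicode database.
name = unicodedata.name(fish_cake)

# The code point does not fit within 16 bits.
fits_in_16_bits = ord(fish_cake) < 2 ** 16

# Four bytes in UTF-8; a surrogate pair (also four bytes) in UTF-16.
utf8 = fish_cake.encode("utf-8")
utf16 = fish_cake.encode("utf-16-be")

print(name, fits_in_16_bits, utf8.hex(), utf16.hex())
```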

¹ Bit: a contraction of ‘binary digit’; the smallest unit of information in Claude Shannon’s foundational information theory, representing a single unit of difference. This is typically, but not necessarily, represented numerically as the distinction between 0 and 1, presumably in part because of Shannon’s use of George Boole’s work in mathematical logic, and the mathematical focus of early computers. But the common idea that specifically numeric binary code lies at the heart of electrical telecoms and electronic computation is something of a distortion: bits or combinations of elementary units of difference can encode any information, numerical or other. The predominant social use of computing devices would of course prove to be in communications, not merely the kinds of calculations that gave the world ‘Little Boy’, and this use is not merely overlaid on some mathematical substratum. The word ‘bit’ might seem an anachronism here, since Baudot’s code preceded Shannon’s work by decades, but the terms of information theory are nonetheless still appropriate: Baudot used combinations of 5 elementary differences to represent all characters, hence it is 5-bit. This meant that it could distinguish only 32 combinations in total: if a single bit encodes a single difference, represented numerically as 0 or 1, each added bit multiplies by 2 the informational capacity of the code; thus 2⁵ = 32, numbered from 0 to 31 if we count from zero. Historically, extensions to the scope of encoding systems have largely involved the addition of extra bits.
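
The arithmetic of added bits can be tabulated for the bit widths mentioned in this essay. The helper name `capacity` and the labels are illustrative; the figures count distinct combinations, not the characters actually assigned in each standard.

```python
def capacity(bits: int) -> int:
    """Number of distinct combinations a code of this many bits can hold."""
    return 2 ** bits


# Bit widths as given in the text above.
widths = {
    "Baudot / ITA2": 5,
    "ASCII": 7,
    "extended ASCII": 8,
    "original Unicode design": 16,
}

table = {name: capacity(bits) for name, bits in widths.items()}

# Counting from zero, the highest code in a 5-bit scheme is 31.
highest_5_bit_code = capacity(5) - 1

for name, size in table.items():
    print(f"{name}: {size} combinations")
```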

Read on: Rob Lucas, ‘The Surveillance Business’, NLR 121.